Coordination and Collaborative Creativity in Music Ensembles (CoCreate)

| | |
|---|---|
| Project number: | FWF P 29427 |
| Project leader: | Dr. Laura Bishop |
| Research facility: | Austrian Research Institute for Artificial Intelligence, Vienna |
| National research partner: | Assoc. Prof. Dr. Werner Goebl, Institute of Music Acoustics (IWK), University of Music and Performing Arts Vienna |
| Date of approval: | 27.06.2016 |
| Project start: | 01.09.2016 |
| Project end: | 30.09.2019 |
| Project website: | http://ofai.at/research/impml/projects/cocreate.html |

Abstract

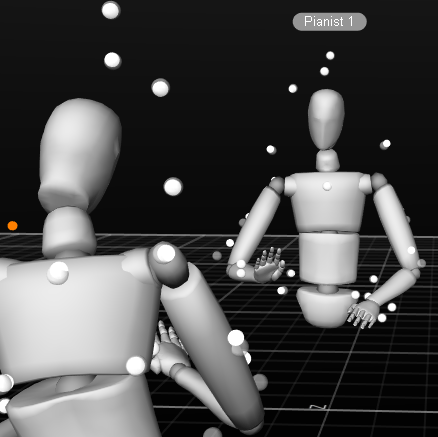

Ensemble musicians must coordinate their playing with high precision to produce a coherent performance. To achieve good coordination, they must be able to predict their co-performers' upcoming actions. Most musicians value originality in their playing, however, and aim to produce unique performances. These conflicting goals raise an interesting question: how do ensemble musicians balance their desire to sound unique with the need to remain predictable to each other? Playing music is an inherently creative task, as it requires the creative interpretation of existing music, if not the creation of new music altogether. This project investigates how ensembles coordinate when playing music that is ambiguous, and therefore places high demands on creative thinking (e.g. improvised music or notated music with a free rhythm). We consider both human-human and human-computer collaborations so that we can test how factors normally present in live human interaction (e.g. the opportunity for communication; the perception of co-performers as in charge of their own actions) affect creative collaboration. Three main questions are addressed: 1) how performers predict each other's intentions, 2) how performers align their interpretations, and 3) whether musicians perceive computer-controlled partners as less creative or less intentional (i.e. not in charge of their actions) than human partners, causing them to perform differently with computer partners than with human partners. To address these questions, we will run a series of studies in which highly skilled musicians perform as duos. Two studies will investigate how visual communication contributes to ensemble coordination. For these studies we will use motion capture and eye-tracking techniques, which will allow us to measure performers' movements and simultaneously determine where they are looking.
In another study, we will investigate how musicians merge their interpretations, by comparing their solo and duo performances of the same pieces. A fourth study will compare human-human performances with human-computer performances to determine whether people play differently with computers than they play with other musicians, and if so, why. In this final study, a novel accompaniment system recently developed by our group will act as the computer partner for human pianists. This system 'listens' to pianists and synchronises its accompaniment with their playing using a timing model, which predicts the timing of upcoming notes based on the timing of previous notes. In the current project, we will extend the accompaniment system so that it can communicate visually through the movements of an avatar head. This project will be the first systematic investigation of how musical creativity is achieved in groups. Guidelines will be generated for systems that enable creative musical collaboration between people and computers, and the upgrades made to the accompaniment system will transform it into a valuable tool for both researchers and music performers.