Synchronization and Communication in Music Ensembles

Research project funded by the Austrian Science Fund (FWF), project P 24546-N15, 2012–2015, carried out at the Austrian Research Institute for Artificial Intelligence (OFAI) and headed by Werner Goebl (PI).

Project summary

Communicating, coordinating, and synchronizing thoughts and actions with one another is a fundamental human faculty. Ensemble music performance poses a particular challenge to this ability, because movements and sounds must be synchronized with the highest precision while tempo and other expressive parameters vary continuously over time. Most of the world's music is performed by more than one person, resulting in a wealth of possible combinations: a four-hand piano duet, a classical string quartet, a spontaneously improvising jazz combo, or a symphony orchestra with choir and soloists, to name just a few. Each combination has its own characteristic dynamics of interpersonal communication. The range extends from the democratic organization of small ensembles, in which one musician takes the lead at one moment only to be led by others at the next, to more hierarchical organizations in which many must follow the sounds or gestures of one (soloist versus accompanists, conductor versus orchestra).

This research project investigates interpersonal synchronization in small music ensembles and the role of gestural communication among ensemble members. The guiding vision is to understand the underlying mechanisms and learning processes of musical synchronization in enough detail to implement them in computational models that operate in real time. Such real-time frameworks will be used for interactive experiments with humans: musicians perform together with computational models whose behavioral characteristics (reactivity, disposition to follow or lead, attentional focus on particular ensemble members, etc.) are controlled and manipulated. Further experiments focus on the kinematics of the ensemble members' communicative gestures (e.g., pick-up head movements) and on how a shared musical goal evolves among the musicians over multiple rehearsal sessions.
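To make the idea of a model with a controllable "disposition to follow or lead" concrete, the sketch below uses the linear phase correction model, a standard model from sensorimotor synchronization research. It is an illustrative assumption, not necessarily the model used in this project; the function `simulate_duo` and its parameters are hypothetical. Each simulated player schedules the next onset one period after the current one, minus a fraction `alpha` of the current asynchrony with the partner. A player with `alpha = 0` ignores the partner (a pure leader), while a larger `alpha` means stronger adaptation (a follower).

```python
import random


def simulate_duo(n_beats, period_a, period_b, alpha_a, alpha_b,
                 noise_sd=0.0, seed=0):
    """Simulate onset times (ms) of two players under linear phase correction.

    Hypothetical illustration: each player corrects a fraction alpha of the
    current asynchrony (own onset minus partner's onset) when scheduling
    the next onset. Optional Gaussian timing noise is added to each interval.
    """
    rng = random.Random(seed)
    onsets_a, onsets_b = [0.0], [0.0]  # both players start together
    for _ in range(n_beats - 1):
        # Asynchrony from each player's point of view (negative = early).
        async_a = onsets_a[-1] - onsets_b[-1]
        async_b = -async_a
        next_a = onsets_a[-1] + period_a - alpha_a * async_a + rng.gauss(0.0, noise_sd)
        next_b = onsets_b[-1] + period_b - alpha_b * async_b + rng.gauss(0.0, noise_sd)
        onsets_a.append(next_a)
        onsets_b.append(next_b)
    return onsets_a, onsets_b


if __name__ == "__main__":
    # Player A leads (alpha = 0) at 500 ms; player B follows (alpha = 0.5)
    # but would prefer a slower 520 ms period on its own.
    a, b = simulate_duo(200, 500.0, 520.0, alpha_a=0.0, alpha_b=0.5)
    print("final asynchrony A - B (ms):", round(a[-1] - b[-1], 3))
    print("follower's final interval (ms):", round(b[-1] - b[-2], 3))
```

Without noise, the asynchrony d = a - b obeys d[n+1] = (1 - alpha_a - alpha_b) d[n] + (period_a - period_b), so it settles at (period_a - period_b) / (alpha_a + alpha_b), here -40 ms (A plays 40 ms ahead), and the follower's interval converges to the leader's 500 ms. This settling requires 0 < alpha_a + alpha_b < 2. Setting both `alpha` values above zero models mutual adaptation, in which the shared tempo settles between the two preferred periods.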

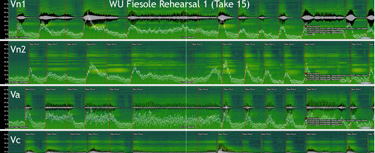

To measure these complex phenomena, hybrid pianos are combined with optical motion capture systems to record each musician's performance and movements while playing together. Data collected from real-world performances and from controlled laboratory experiments with both student and expert musicians will be analyzed with different modeling approaches (such as dynamical systems or machine learning) to understand the dynamics and mechanisms of interpersonal music making.

Potential applications of this research include educational settings, where computational visualization tools could help musicians become more aware of movement and sound synchrony; new performance interfaces for computer music and for interaction between musicians in different locations; and intelligent accompaniment systems that not only react but act as full musical companions, even visually interpreting the gestures of their human partners.